How to Build an Automated Trading System

In Excel, Python, and Build Alpha

It is no secret that many traders fail to achieve success and a level of consistency. The persistent traders will eventually understand they need to quantify what their edge is. This inevitably leads them down the road of systematic or quantitative trading but with no direction on how to begin.

I am often asked how to build an automated trading system or how to create a trading algorithm or become a software trader. In this post, I will walk through testing a simple two rule system for the SP500 using Excel, Python and Build Alpha. The goal is to show how simple investigating quantifiable edges can be.

I will use the same simple trading algo idea in all three platforms to hopefully show some algo trading 101 level steps in each platform. The strategy will buy when IBR (defined later) is less than 20 and the closing price is below the 10 period simple moving average. It will hold for 1 day and will not have any position sizing or risk management. Very simple to create.

We will also create visuals for the equity curve (P&L graph) and drawdown.

How to Create a Trading Algo in Excel

Starting with Excel makes the most sense as the majority will be most familiar with it. I will share many screenshots and explain the steps one by one on how to do backtesting in Excel. This is a simple example with no risk management yet, so if you are an Excel expert this may feel like a slow walk in the park. That is by design!

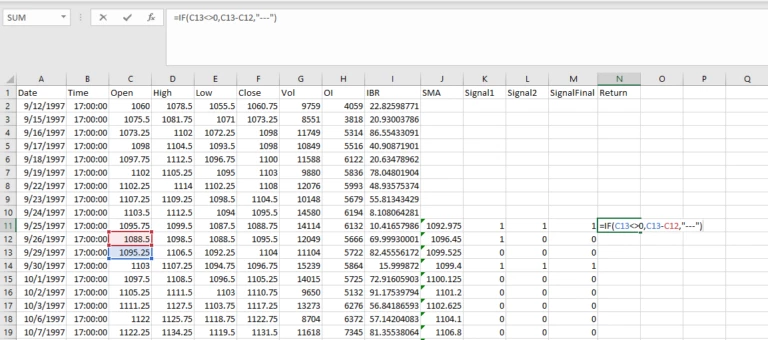

Step 1: Open the data file in Excel. Finance data is almost always going to be displayed in this format of Date, Time, Open, High, Low, Close, Volume (and Open Interest if futures or options). It is often referred to as OHLCV data.

Step 2: Calculate IBR or Interbar Rank. This is sometimes referred to as IBS or Interbar strength. It is a 1 period stochastic: it measures where the close sits within the bar’s trading range. An IBR of 20% or lower would mean the close was in the lower 20% of the bar’s range.

The formula is simple and can be seen below. It is the difference between the close and the low of the bar divided by the range of the bar. Please note I added 0.0001 to the denominator to avoid any divide by 0 errors when/if the high and low are equal to each other. I have also multiplied IBR by 100 in order to express it as a percent.

After typing in the formula, drag this value down for all rows of data in order to calculate the IBR for each bar.
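For readers who think in code, the same calculation can be written as a short Python function. This is only a minimal sketch: the function name `ibr` and the sample prices are made up for illustration and do not come from the spreadsheet.

```python
def ibr(high: float, low: float, close: float) -> float:
    """Where the close sits within the bar's range, expressed as a percent."""
    # The 0.0001 in the denominator avoids a divide by zero when high == low
    return (close - low) / (high - low + 0.0001) * 100


# Hypothetical bar: high 3350, low 3340, close 3341.5
value = ibr(3350.0, 3340.0, 3341.5)
print(round(value, 2))  # just under 15, i.e. a close in the lower 20% of the range
```

Because of the small constant in the denominator, a close exactly at the high comes out marginally below 100 rather than exactly 100.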

Step 3: Calculate the 10 period Simple Moving Average. Go down to the 10th bar and add the formula below. Drag this down for all rows of data to calculate the 10SMA for each bar.

Step 4: Let’s add a new column and calculate our 10 period SMA signal. I have named this column Signal1. We will check if the current close is below the current SMA.

In order to check this, we can use an Excel IF statement. If the close is below the SMA return a 1 else return a 0.

Step 5: Add another column named Signal2 to calculate our IBR signal. Again use an IF statement to check whether the current IBR is less than or equal to 20%. That is, if the current bar’s IBR is at or below 20% return a 1 else return a 0.

Step 6: We need to combine our two signals into one signal. That is, whenever we close below the 10 SMA and have a weak close in the bottom 20% of the day’s range we want to return a 1 otherwise return a 0. A 1 would mean a green light to trade and a 0 would sit us on the sidelines as we have no edge.

All we need to do is check the sum of the previous two columns we created. If their sum is 2 then we return a 1 else we return a 0.

Step 7: Next let’s calculate the raw one day returns on the SP500. We have been using the closing price in all of our signals so we cannot truly know if our signal(s) are true until the close of the bar. Thus we cannot enter until the next bar’s open.

Let’s assume we get a true signal on Monday’s close. We would then enter on Tuesday’s open and hold for one bar exiting on Wednesday’s open. We could exit on Tuesday’s close but for simplicity’s sake we won’t in this example.

Our Excel function checks if we have data for the next two days by checking that the opening price exists and is non-zero. If we have data, we can then subtract tomorrow’s open from the open of two days from now. That is, take Wednesday’s open minus Tuesday’s open per our example and store it in Monday’s row.

This gives us a data column that says, if we have a signal on this bar and buy the next open, hold for one day, what would our return be?

Step 8: Now let’s get our trade returns. If we have an actual signal in our ‘SignalFinal’ column from our two indicators, what would our return be?

I also multiplied by 50 to represent the point value of the SP500 emini futures contract. That is, if you buy at 3346 and sell at 3347 then you’ve earned $50 per contract not $1 per contract. Each futures contract has a point value and this one’s is 50.

Step 9: Let’s copy our Date column and Trades column into a new tab. I clicked on the ‘A’ above ‘Date’ to highlight the entire column. I then hold ctrl and click on the ‘O’ above the ‘Trades’ column. This should highlight both columns.

Press ctrl + c to copy. Open a new tab and press ctrl + v to paste.

Step 10: Let’s do some house-keeping to remove the days we did not trade. We first need to sort by our Trade column and delete all days with “—” in the cell.

After deleting non-trade days, I then re-sort by date from oldest to newest.

To sort please highlight the columns, go to the data menu and select sort.

Step 11: Time to create our P&L Graph. We need to add each trade value to a rolling sum of all trade values. The simple formula is below.

Drag this formula down to calculate the equity curve’s value after each trade.

Step 12: In order to calculate the drawdown of our simple trading program we need to first calculate the high watermark or the rolling maximum amount our account would have achieved following this simple trading system.

Once we have the maximum or high watermark, we can then subtract our current P&L from the maximum to determine the current drawdown. I have added new columns for the maximum P&L (MAX) and the Drawdown (DD).

Step 13: Now we can plot our P&L Graph and Drawdown. Simply highlight the column, go to the Insert menu and select a line chart.

How to Build a Trading Algo in Python

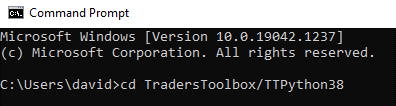

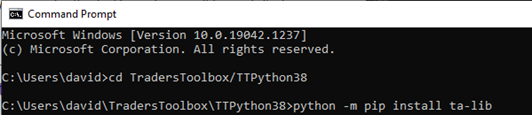

I have written a few other blogs on reading a text file in Python and on using pandas, but this example will show not only how to read in data but also how to complete all the steps above in one simple Python script. The goal is building trading algorithms with Python, or at least taking the first steps.

That way you have a very rudimentary framework for testing automated trading systems and creating trading algos in python. Hopefully this serves as an intro example of how to backtest a trading program or strategy.

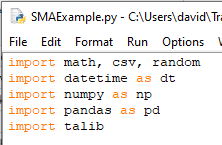

Step 1: Create a new file, import our plotting library and pandas. Matplotlib is arguably the most popular python visualization library.

In the pandas read_csv call I have specified the file I’d like to read in, how to separate my columns, and what column should be the index of my pandas dataframe.
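A minimal sketch of this step might look like the following. The in-memory text stands in for a real data file so the snippet runs as-is, and the prices in it are made up. With real data you would pass a file path (for example a hypothetical `ES.csv`) to `read_csv` instead, and the separator may differ from the comma assumed here.

```python
import io

import matplotlib.pyplot as plt  # plotting library, used in the final step
import pandas as pd

# Stand-in for a data file on disk; the values are made up for illustration
raw = io.StringIO(
    "Date,Time,Open,High,Low,Close,Volume\n"
    "2020-09-01,16:00,3500.00,3520.00,3490.00,3515.00,100000\n"
    "2020-09-02,16:00,3516.00,3540.00,3510.00,3535.00,120000\n"
    "2020-09-03,16:00,3530.00,3535.00,3420.00,3430.00,200000\n"
)

# File to read, column separator, and the column to use as the index
df = pd.read_csv(raw, sep=",", index_col="Date", parse_dates=True)
print(df.head())
```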

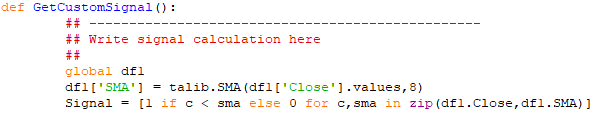

Step 2: Let’s calculate our indicators. To calculate IBR we will use a list comprehension, which is a very cool Python trick. It allows us to loop over our entire dataframe in one line of code. The calculation is the same as in Excel and will create a dataframe column named IBR as we had in Excel.

We will then use the pandas rolling function to create our 10 period simple moving average (mean). I have saved our moving average values in a new dataframe column named ‘SMA’ similar to excel.
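The two indicator calculations could be sketched as below. The small dataframe is made-up sample data so the snippet runs on its own. The column names `IBR` and `SMA` follow the text, while the exact code in the original script may differ.

```python
import pandas as pd

# Made-up sample bars (not real market data)
close = [100.0 + i for i in range(12)]
df = pd.DataFrame({
    "High": [c + 1.0 for c in close],
    "Low": [c - 3.0 for c in close],
    "Close": close,
})

# IBR via a list comprehension: one pass over every row, same formula as Excel
df["IBR"] = [
    (c - l) / (h - l + 0.0001) * 100
    for h, l, c in zip(df["High"], df["Low"], df["Close"])
]

# 10 period simple moving average of the close; the first 9 rows are NaN
df["SMA"] = df["Close"].rolling(10).mean()

print(df.tail(3))
```

The first nine SMA values are empty because a 10 period average needs ten closes, which mirrors starting the Excel formula on the 10th bar.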

Step 3: Following similar steps as we did in excel, we can convert our indicators into actionable signals using ‘if’ logic. We will use python’s list comprehension feature which allows us to loop through our entire dataframe in a single line of code.

For instance, we will create a new dataframe column named Signal1 which will store either a 1 if IBR is less than 20 or a 0 if it is not less than 20.
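As a rough sketch, the signal columns might be built like this. `Signal1` and `SignalF` are named in the text; `Signal2` for the moving average check is an assumption borrowed from the Excel naming (where the numbering was the other way around). The sample values are made up.

```python
import pandas as pd

# Made-up indicator values; NaN mimics an SMA that is not yet available
df = pd.DataFrame({
    "Close": [100.0, 98.0, 103.0, 97.0],
    "SMA": [float("nan"), 99.0, 101.0, 99.0],
    "IBR": [10.0, 15.0, 12.0, 45.0],
})

# 1 if IBR is less than 20, else 0
df["Signal1"] = [1 if x < 20 else 0 for x in df["IBR"]]

# 1 if the close is below the 10 period SMA, else 0 (NaN compares as False)
df["Signal2"] = [1 if c < s else 0 for c, s in zip(df["Close"], df["SMA"])]

# Combined signal: 1 only when both individual signals are 1
df["SignalF"] = [
    1 if a + b == 2 else 0 for a, b in zip(df["Signal1"], df["Signal2"])
]

print(df)
```

Only the second row ends up with a combined signal here: it is the only bar with both a weak close and a close below the average.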

Step 4: Following in Excel’s footsteps, let’s continue creating our Python trading algo by calculating the returns and then mapping our returns and signals into actual trade results.

First, we can use pandas’ built-in shift function to access tomorrow’s opening price and the opening price from two days from now. This is similar to how we calculated returns in Excel.

To create the trade results, we need to know if there was a signal or not. We can multiply our SignalF column and our return column. If we have a signal we will have a trading return and if we do not have a signal (0) then the return will get zeroed out. I’ve also multiplied by 50 to account for the SP500 emini future contract’s point value to convert our Trades into actual dollar values for 1 contract.
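A sketch of the return and trade calculation, assuming a return column named `Return` (the text does not name it) and made-up opening prices:

```python
import pandas as pd

POINT_VALUE = 50  # dollars per point for the SP500 emini futures contract

# Made-up opens and signals for illustration
df = pd.DataFrame({
    "Open": [3340.0, 3346.0, 3347.0, 3350.0, 3345.0],
    "SignalF": [1, 0, 1, 0, 1],
})

# Signal on this bar: buy the next open, sell the open after that.
# shift(-1) is tomorrow's open, shift(-2) is the open two days from now.
df["Return"] = df["Open"].shift(-2) - df["Open"].shift(-1)

# Zero out the return on bars without a signal and convert points to dollars
df["Trades"] = df["SignalF"] * df["Return"] * POINT_VALUE

print(df)
```

The first row reproduces the earlier example: buy at 3346, sell at 3347, and earn $50 on one contract. The last two rows have no future opens to look up, so their values are NaN rather than a number.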

Step 5: In excel we had to copy and paste our trade returns into a new tab, sort them, remove days we did not trade, etc. In Python we can simply create a new pandas dataframe, named Trades, and filter our original dataframe. We will simply return only the rows of our original dataframe where our signal was equal to 1. That is, only returning the values when we took a trade.
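The filter could be sketched as follows. The variable is written in lowercase as `trades` here to keep it distinct from the `Trades` column. Dropping signals that have no return yet (the final bars of the data) is an extra step assumed for this sketch, not something the text describes. The sample values are made up.

```python
import pandas as pd

# Made-up signals and dollar trade results, indexed by date
df = pd.DataFrame(
    {
        "SignalF": [1, 0, 1, 0, 1],
        "Trades": [50.0, 0.0, -250.0, 0.0, float("nan")],
    },
    index=pd.to_datetime(
        ["2020-09-01", "2020-09-02", "2020-09-03", "2020-09-04", "2020-09-08"]
    ),
)

# Keep only the rows where we actually took a trade
trades = df[df["SignalF"] == 1]

# Assumption: discard signals at the end of the data with no result yet
trades = trades.dropna(subset=["Trades"]).copy()

print(trades)
```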

Step 6: To calculate our P&L and drawdown we can use the following two simple lines of code. Since our P&L is simply the cumulative sum of our trades we can easily just call the cumsum function on our Trades column.

In order to calculate the drawdown we can just subtract our current P&L from the rolling cumulative maximum P&L. The pandas library has a built-in cummax function we can use, which saves us from creating an additional column to store our maximum values (high watermarks). This means one less column than Excel.
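Those two lines might look like the sketch below. The column names `PnL` and `DD` are assumptions (the Excel sheet used MAX and DD), and the trade values are made up.

```python
import pandas as pd

# Made-up dollar results for five trades
trades = pd.DataFrame({"Trades": [50.0, -250.0, 400.0, -100.0, 300.0]})

# Equity curve: running total of all trade results
trades["PnL"] = trades["Trades"].cumsum()

# Drawdown: distance below the high watermark (running maximum of the P&L)
trades["DD"] = trades["PnL"].cummax() - trades["PnL"]

print(trades)
```

With these numbers the equity curve runs 50, -200, 200, 100, 400 and the drawdown runs 0, 250, 0, 100, 0. Drawdown is expressed as a positive dollar amount below the most recent peak.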

Step 7: Plotting in python is simple. We already imported matplotlib – our plotting library – in the first step of this python walk through. Now we can call the pandas plot function, specify that we want to use subplots, and then display our plot with the show function.
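A minimal plotting sketch, assuming a dataframe with `PnL` and `DD` columns (assumed names) and made-up values:

```python
import matplotlib.pyplot as plt
import pandas as pd

# Made-up equity curve and drawdown values, indexed by trade date
trades = pd.DataFrame(
    {
        "PnL": [50.0, -200.0, 200.0, 100.0, 400.0],
        "DD": [0.0, 250.0, 0.0, 100.0, 0.0],
    },
    index=pd.to_datetime(
        ["2020-09-01", "2020-09-03", "2020-09-08", "2020-09-10", "2020-09-15"]
    ),
)

# subplots=True draws each column in its own panel with a shared date axis
axes = trades[["PnL", "DD"]].plot(subplots=True)

# Display the figure
plt.show()
```

The result is one panel for the equity curve and one for the drawdown, stacked vertically.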

How to Build a Trading Algo in Build Alpha

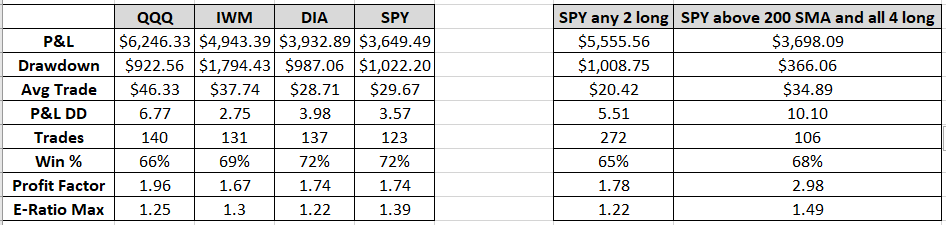

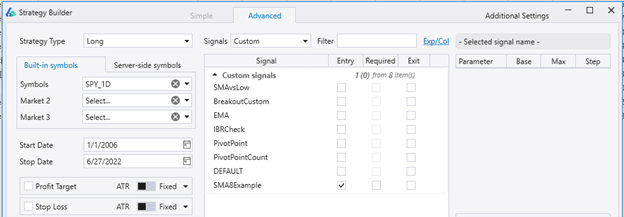

Step 1: Configure Build Alpha’s main screen. Set the symbol to ES which is the symbol for the SP500 emini futures contract.

Set the date range to start in 1997 and end near Sep 2020 to match the same data used in excel and python.

In the lower left set the max holding time to 1 bar in order to again match excel and python.

Step 2: Let’s select our signals. Type IBR into the ‘Filter’ search bar near the top. Scroll down and select the signal IBR <= 20 as an ‘Entry’.

Then type in SMA in the ‘Filter’ search bar near the top. Scroll down until you find the signal Close <= SMA(10). Select this signal as an ‘Entry’.

Then hit Simulate!

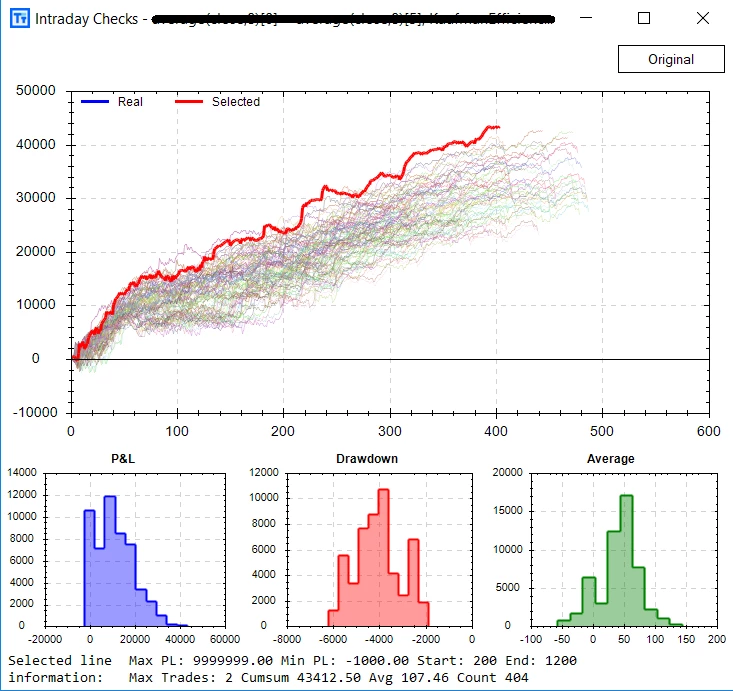

Step 3: In the Results window you will view our two rule strategy at the top (highlighted in blue). Double click on the strategy to view the P&L graph. Toggle drawdown off and on by hitting the drawdown button.

What's Next?

Some logical next questions might be how can I add a stop? How can I add more signals? How can I trade automated strategies after I’ve tested in excel or python? I encourage you to try adding on to this simple trading program idea in both excel and python. Then there can be a greater appreciation for how simple Build Alpha can make things!

Want to add a stop? Click a button. Want to add a signal? Click a button.

I am often asked how to build automated trading systems, how to create automated trading systems in Excel, what the best automated trading software is, and what software professional traders use. This blog post and the rest of the Build Alpha blog can answer those questions. The information is out there, and so are the tools.

Trading is not easy, but it is simple. Hunt for edges, collect them, execute them.

If you want access to the python script or the excel sheet please send me an email at david@buildalpha.com.

Other Build Alpha Python Resources

- You can now add custom python signals to Build Alpha’s genetic algorithm. Learn how here: Use Python in Build Alpha

- More python examples: in this blog I import custom data and build a sample strategy with python on $AAPL

- More blogs about robustness testing, python, Build Alpha and strategy development at the Build Alpha blog.

- To learn more about me, head over to the about page at BuildAlpha.com/about

Author

David Bergstrom – the guy behind Build Alpha. I have spent a decade-plus in the professional trading world working as a market maker and quantitative strategy developer at a high frequency trading firm with a Chicago Mercantile Exchange (CME) seat, consulting for Hedge Funds, Commodity Trading Advisors (CTAs), Family Offices and Registered Investment Advisors (RIAs). I am a self-taught programmer utilizing C++, C# and python with a statistics background specializing in data science, machine learning and trading strategy development. I have been featured on Chatwithtraders.com, Bettersystemtrader.com, Desiretotrade.com, Quantocracy, Traderlife.com, Seeitmarket.com, Benzinga, TradeStation, NinjaTrader and more. Most of my experience has led me to a series of repeatable processes to find, create, test and implement algorithmic trading ideas in a robust manner. Build Alpha is the culmination of this process from start to finish. Please reach out to me directly at any time.
